The Centre for Intelligent Mobile Systems (CIMS)

Short description

The Centre for Intelligent Mobile Systems was established in partnership with BAE Systems with the aim of undertaking research and representative experimentation in the area of intelligent systems, to mature technology relevant to BAE Systems in the current and future defence and security sector.

Leader

Personnel

Researchers:

Salah Sukkarieh

Hugh Durrant-Whyte

Thierry Peynot

Bertrand Douillard

Teresa Vidal-Calleja

Tim Bailey

Mitch Bryson

Research Students:

Zhe Xu

Chris Brunner

Peter Morton

Alastair Quadros

Mark De Deuge

Abdallah Kassir

Gabriel Agamennoni

John Vial

Lachlan McCalman

Research Engineers:

Vsevolod Vlaskine

Mark Calleija

Cedric Wohlleber

Laura Merry

International Visitors:

Giulio Reina (University of Salento, Italy)

Annalisa Milella (Institute of Intelligent Systems for Automation, Italy)

Noah Kuntz (Drexel University, USA)

Marie Amiot (Paul Sabatier University, France)

Mathieu Bernou (Paul Sabatier University, France)

Hua Mu (National University of Defense Technology, P.R. China)

Goals

The primary objective of CIMS has been to develop new algorithms and technology for intelligent sensing, robust perception, navigation and cooperative control, for applications including surveillance, tracking, and picture compilation or semantic scene understanding, with a focus on solutions suited to urban environments.

The goals of CIMS are:

- To conduct fundamental research towards robust perception of urban environments

- To perform real world implementation, experimentation and validation of this research on real hardware operating in complex, representative urban environments

- To exchange personnel between BAE Systems and the ACFR to facilitate the transfer of knowledge

- To position BAE Systems to take a leading international role in the future of defence and security systems.

Start and end dates

- Start: February 2009

- End: February 2012

Long description

The Centre for Intelligent Mobile Systems was established in partnership with BAE Systems with the objective of undertaking fundamental research for Intelligence, Surveillance, Tracking and Reconnaissance (ISTAR) applications in urban environments.

To meet these objectives, CIMS is divided into five core research programs:

Urban Perception:

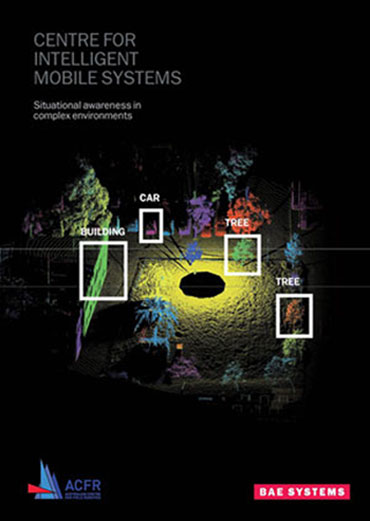

Towards building a digital 4D (spatio-temporal) representation of complex urban environments in real-time. This requires simultaneously performing tasks including mapping, segmentation, tracking and classification, from a wide variety of sensors incorporating vastly different sensor modalities and perspectives.

Persistence:

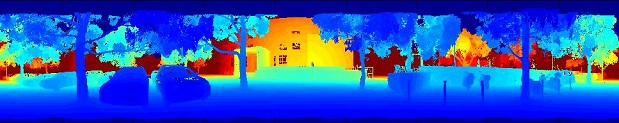

For improving the reliability and integrity of sensor and perception systems, with the aim of developing methods to detect, identify and mitigate perception failures, particularly in realistic adverse environmental conditions, including rain, fog, dust or smoke.

Cooperative Control:

To close the loop around high-level perception systems by optimally controlling a team of mobile sensor platforms and stationary nodes so that they actively acquire the best information with respect to the perception requirements, maximising the utility of the digital representation of the environment.

Cooperative Localisation:

To provide a framework in which teams of mobile agents can share localisation information, enabling a joint estimate for the whole team. This enables improvements in localisation accuracy and endows platforms with navigation capabilities that would not be feasible when using their sensors alone.

All Source Localisation:

To provide a flexible localisation system that can fuse information from a wide variety of sensor sources, including GPS/INS, velocity from time-differenced GPS carrier phase (TDCP), signals of opportunity (3G, digital audio broadcast), visual odometry and LIDAR-based SLAM.
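As a rough illustration of what fusing several localisation sources can mean, the sketch below combines independent scalar Gaussian estimates (for example, one position coordinate from GPS/INS, visual odometry and LIDAR-based SLAM) in information form: the information (inverse variance) of each source adds, and the fused mean is the information-weighted average. This is a minimal sketch assuming independent, unbiased estimates with known variances; the function name `fuse_information` and the numbers are hypothetical and not taken from the CIMS system, which would also have to deal with correlated errors, time alignment and outliers.

```python
from typing import List, Tuple


def fuse_information(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent scalar Gaussian estimates given as (mean, variance).

    Hypothetical helper. In information form each estimate contributes
    information 1/variance and an information-weighted mean; with independent
    errors these contributions simply add.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    total_info = 0.0    # sum of 1/variance
    weighted_sum = 0.0  # sum of mean/variance (the "information vector")
    for mean, var in estimates:
        if var <= 0:
            raise ValueError("variances must be positive")
        total_info += 1.0 / var
        weighted_sum += mean / var
    fused_var = 1.0 / total_info
    fused_mean = weighted_sum * fused_var
    return fused_mean, fused_var


if __name__ == "__main__":
    # Hypothetical easting estimates as (metres, metres^2), one per source.
    sources = [
        (10.0, 4.0),  # e.g. GPS/INS
        (12.0, 1.0),  # e.g. visual odometry
        (11.0, 4.0),  # e.g. LIDAR-based SLAM
    ]
    mean, var = fuse_information(sources)
    print(f"fused mean={mean:.3f} m, variance={var:.3f} m^2")
```

With these numbers the fused variance (about 0.667 m^2) is smaller than that of any single source, which is the basic benefit of combining many sources.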

Experimental Demonstration System:

To ensure that all of the research conducted by CIMS is deployed on real hardware for testing and validation in realistic conditions, a pair of world-class mobile sensor platforms has been developed. Each unmanned ground vehicle platform carries a wide variety of sensors, which is critical to supporting research in robust perception in urban environments. The sensor suites include:

- DGPS/INS

- Vision (monocular, three-camera stereo, panospheric cameras)

- Thermal infrared

- 2D LIDAR

- 3D LIDAR

- 2D Scanning FMCW RADAR

Outcomes/results

The centre has been successful in its core objective of conducting fundamental new research in the areas outlined above, resulting to date in 25 peer-reviewed publications and 30 patent applications for the University of Sydney and BAE Systems.

CIMS has deployed much of this technology as working prototypes capable of running reliably in real time, so that its research publications are backed by real-world experimentation in representative urban environments. This technology has been demonstrated to BAE Systems both in Australia and in the United Kingdom, at BAE Systems sites in Bristol and at an exhibition in London.

Funding

BAE Systems UK

Publications

- Towards Reliable Perception for Unmanned Ground Vehicles in Challenging Conditions

- Towards Discrimination of Challenging Conditions for UGVs with Visual and Infrared Sensors

- Comparison of Opportunistic Signals for Localisation

- Laser-Camera Data Discrepancies and Reliable Perception in Outdoor Robotics

- Hybrid Elevation Maps: 3D Surface Models for Segmentation

- Visual Metrics for the Evaluation of Sensor Data Quality in Outdoor Perception

- Perception Quality Evaluation with Visual and Infrared Cameras in Challenging Environmental Conditions

- A Pipeline for the Segmentation and Classification of 3D Point Clouds

- On the Segmentation of 3D LIDAR Point Clouds

- Decentralised Control of Robot Teams with Discrete and Continuous Decision Variables

- Decentralised Cooperative Localisation for Heterogeneous Teams of Mobile Robots

- Short-Range Radar Perception in Outdoor Environments

- Combining Multiple Sensor Modalities for a Localisation Robust to Smoke

- Combining Radar and Vision for Self-Supervised Ground Segmentation in Outdoor Environments

- Radar-Based Perception for Autonomous Outdoor Vehicles

- Can a 3D Classifier Be Trained Without Field Samples?

- An evaluation of dynamic object tracking with 3D LIDAR

- Scan Segments Matching for Pairwise 3D Alignment

- An Occlusion-aware Feature for Range Images

- Sensor Data Consistency Monitoring for the Prevention of Perceptual Failures in Outdoor Robotics

- Integrated probabilistic generative model for detecting smoke on visual images

- Learning utility models for decentralised coordinated target tracking

Videos

Dynamic 3D Perception: Outdoor Tracking

This video shows the segmentation and tracking of dynamic objects in an outdoor environment, using Velodyne 3D LIDAR data.

In the first scenario, two people run in a loop to demonstrate the tracking. In the second scenario, an object is thrown to demonstrate the fidelity of the real-time scene segmentation and tracking.
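To make the segmentation step more concrete, the sketch below shows one simple, generic way to split a point cloud into object segments: Euclidean clustering, where any two points closer than a distance threshold are linked into the same segment (connected components found by flood fill). It is an illustration only, not the segmentation method used in the video; it assumes ground points have already been removed, accepts 2D or 3D tuples, and uses a brute-force O(n^2) neighbour search that a real-time Velodyne pipeline would replace with a grid or k-d tree. The function name `euclidean_clusters` and the radius are hypothetical.

```python
import math
from collections import deque
from typing import List, Sequence


def euclidean_clusters(points: Sequence[Sequence[float]], radius: float) -> List[List[int]]:
    """Group point indices into segments of points linked by gaps <= radius.

    Hypothetical helper: assumes ground points were removed beforehand and
    uses brute-force neighbour search for clarity rather than speed.
    """
    n = len(points)
    labels = [-1] * n  # -1 means "not yet assigned to a segment"
    clusters: List[List[int]] = []
    for seed in range(n):
        if labels[seed] != -1:
            continue
        cluster_id = len(clusters)
        labels[seed] = cluster_id
        members = [seed]
        queue = deque([seed])
        while queue:  # breadth-first flood fill from the seed point
            i = queue.popleft()
            for j in range(n):
                if labels[j] == -1 and math.dist(points[i], points[j]) <= radius:
                    labels[j] = cluster_id
                    members.append(j)
                    queue.append(j)
        clusters.append(sorted(members))
    return clusters


if __name__ == "__main__":
    scan = [(0.0, 0.0, 0.0), (0.2, 0.0, 0.0), (5.0, 5.0, 0.0), (5.1, 5.0, 0.0), (10.0, 0.0, 0.0)]
    print(euclidean_clusters(scan, 0.5))
```

Tracking would then associate segments between successive scans (for example by nearest centroid), which this sketch does not attempt.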

Dynamic 3D Perception: Active Outdoor Tracking

This video shows the perception pipeline, which comprises ground/object classification, object segmentation, dynamic object detection and tracking, information prediction and autonomous vehicle control.

The robots control their own actions fully autonomously with no user intervention. The system predicts how much information the robots would obtain by moving in different ways and selects the action that will lead to gaining the most information for the purpose of multi target tracking.

A combination of 2D SICK and 3D Velodyne LIDARs is used for sensing. In the first scenario, Mantis (using its SICK LIDAR with a 180-degree field of view) can only see forwards, so it can respond to targets only once they are in its field of view. In the second scenario, information is shared from Shrimp (using the 360-degree field of view of its 3D Velodyne LIDAR), allowing Mantis to react to a new target instantly, even though it has not yet seen it for itself. Finally, both Shrimp and Mantis share information and autonomously position themselves to track multiple targets optimally.
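The idea of predicting how much information each possible move would yield can be sketched with a Kalman-filter covariance update. For each candidate pose, the sketch predicts the target's posterior covariance after one position measurement taken from that pose and scores the move by the reduction in Gaussian entropy, 0.5·log(det P_prior / det P_post); a target outside the sensor's field of view yields no measurement and therefore zero gain, mirroring the forward-looking Mantis case. This is a minimal sketch under assumed models (a 2D target position, a direct position measurement whose noise grows linearly with range, candidate poses standing in for actions); the function names, pose format and noise parameters are hypothetical and not the CIMS implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]
Pose = Tuple[float, float, float]  # hypothetical (x, y, heading in radians)


def det2(m: Matrix) -> float:
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def inv2(m: Matrix) -> Matrix:
    d = det2(m)
    return [[m[1][1] / d, -m[0][1] / d], [-m[1][0] / d, m[0][0] / d]]


def in_fov(pose: Pose, target: Sequence[float], fov_rad: float) -> bool:
    """True if the target bearing lies within the sensor's field of view."""
    x, y, heading = pose
    bearing = math.atan2(target[1] - y, target[0] - x)
    # Wrap the bearing error into [-pi, pi).
    error = (bearing - heading + math.pi) % (2 * math.pi) - math.pi
    return abs(error) <= fov_rad / 2


def info_gain(prior_cov: Matrix, pose: Pose, target: Sequence[float], fov_rad: float,
              base_sigma: float = 0.1, sigma_per_m: float = 0.05) -> float:
    """Predicted entropy reduction (nats) from one measurement taken at `pose`."""
    if not in_fov(pose, target, fov_rad):
        return 0.0  # target unseen: no measurement, no information
    # Assumed noise model: standard deviation grows linearly with range.
    sigma = base_sigma + sigma_per_m * math.dist(pose[:2], target)
    prior_info = inv2(prior_cov)
    # Kalman update in information form with H = I and R = sigma^2 * I.
    post_info = [[prior_info[i][j] + (1.0 / sigma**2 if i == j else 0.0)
                  for j in range(2)] for i in range(2)]
    post_cov = inv2(post_info)
    return 0.5 * math.log(det2(prior_cov) / det2(post_cov))


def best_action(prior_cov: Matrix, target: Sequence[float],
                candidates: Dict[str, Pose], fov_rad: float) -> str:
    """Greedily pick the candidate pose with the largest predicted information gain."""
    return max(candidates, key=lambda name: info_gain(prior_cov, candidates[name], target, fov_rad))
```

For example, with a target at (10, 0), a 180-degree field of view and candidates "facing_away" (0, 0, pi), "turn_towards" (0, 0, 0) and "approach" (5, 0, 0), the sketch prefers "approach": the closer measurement is less noisy, while facing away gains nothing. Because the gain for a linear-Gaussian model does not depend on the actual measurement value, it can be computed before the robot moves.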

Dynamic 3D Perception: Indoor Tracking

This video shows the segmentation and tracking of dynamic objects in a cluttered indoor environment, using Velodyne 3D LIDAR data and the projection of that data into a video camera.

Dynamic 3D Perception: Indoor Object Tracking

This video shows the segmentation and tracking of dynamic objects in a cluttered indoor environment, using Velodyne 3D LIDAR data and the projection of that data into a video camera.

Decentralised, Coordinated Target Tracking with Autonomous MAVs

A video demonstrating decentralised coordinated target tracking with multiple autonomous Micro-Air Vehicles (MAVs). The MAVs are autonomously controlled by a decentralised network of ground-based computers and track multiple simulated targets with bearings-only sensors. Each MAV is restricted to tracking one target at a time. The objective is to position the MAVs to maximise the information they gather about the targets, that is, to reduce the targets' position uncertainty. Two approaches are presented: implicit coordination, where each MAV greedily selects its best target and coordination arises from shared target information, and explicit coordination, where the team jointly decides on the best action. Our explicit approach allows the joint team decision, which comprises a mixture of discrete (i.e. which target a MAV should track) and continuous (i.e. how a MAV should move) variables, to be solved in a decentralised manner.
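Why a joint decision can beat independent greedy choices is easy to see on a toy version of the discrete part of the problem. In the sketch below each MAV picks one target; a target's value is the best utility among the MAVs tracking it, so a second MAV on the same target adds nothing. Under implicit coordination each MAV picks its individually best target; under explicit coordination the team picks the assignment with the highest joint value. This is an illustration under assumed, hypothetical utilities: it solves the joint problem by centralised brute force over the discrete choices only, and is not the decentralised mixed discrete/continuous method described above. The function names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

Assignment = Tuple[int, ...]  # assignment[mav] = index of the target that MAV tracks


def team_value(assignment: Assignment, utility: List[List[float]]) -> float:
    """Toy joint utility: each tracked target is worth the best utility among
    the MAVs assigned to it, so a redundant MAV on the same target adds nothing."""
    best: Dict[int, float] = {}
    for mav, target in enumerate(assignment):
        u = utility[mav][target]
        if target not in best or u > best[target]:
            best[target] = u
    return sum(best.values())


def implicit_assignment(utility: List[List[float]]) -> Assignment:
    """Each MAV independently picks the target with its highest individual utility."""
    return tuple(max(range(len(row)), key=row.__getitem__) for row in utility)


def explicit_assignment(utility: List[List[float]]) -> Assignment:
    """The team jointly picks the assignment maximising team_value (brute force)."""
    n_targets = len(utility[0])
    choices = product(range(n_targets), repeat=len(utility))
    return max(choices, key=lambda a: team_value(a, utility))


if __name__ == "__main__":
    # Rows are MAVs, columns are targets (hypothetical utilities).
    utility = [[5.0, 3.0],
               [4.0, 3.5]]
    greedy = implicit_assignment(utility)
    joint = explicit_assignment(utility)
    print(greedy, team_value(greedy, utility))
    print(joint, team_value(joint, utility))
```

Here both MAVs greedily choose target 0 (team value 5.0), whereas the joint choice sends the second MAV to target 1 (team value 8.5). Brute force grows exponentially with team size, which is one reason a decentralised solver is needed in practice.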

Event graphs for Velodyne based tracking

3D Shape Analysis for Object Recognition: Visualisations

Rendezvous Park - down - part I

On the Segmentation of 3D LIDAR Point Clouds (ICRA 2011)

This video accompanies the ICRA 2011 publication "On the Segmentation of 3D LIDAR Point Clouds" and shows the segmentation results produced by the algorithms described in that paper.